Construction and demolition waste accounts for a large share of landfill waste, with estimates in the region of 30-40%, yet it is an often overlooked part of our waste stream. This project was part of Andrew Witt’s larger body of research on circular material cycles and waste upcycling. We imagined a near future in which a pre-demolition material quantity calculation could be performed quickly with a phone app that scans and identifies materials on the fly. A tradesperson who knows building materials would tag key parts of the images and let the computer do the rest of the heavy lifting.

This work was done for the Harvard Graduate School of Design under Professor Andrew Witt, as a collaboration between George Guida and myself. The 3D scan model data is courtesy of Matterport.

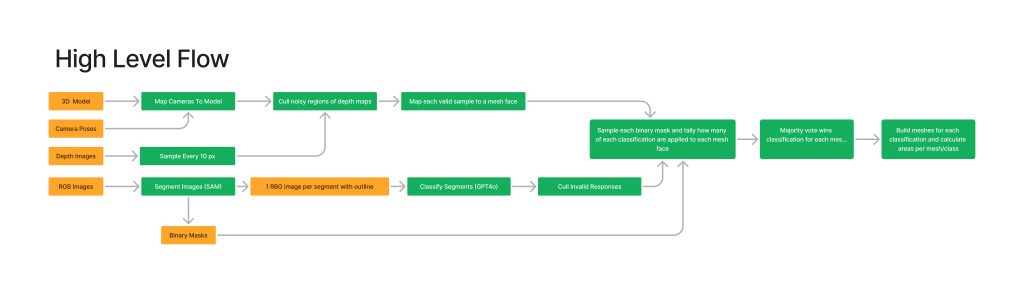

We classified surface materials in 3D scans and calculated material quantities from them. Surprisingly few tools exist for classifying materials in building scans, and those that do are often proprietary. Rather than generate our own scans, we used scans provided by Matterport as a testing ground with real-world data. These scans came with the original scan photos, metadata for the camera transforms, and 3D models pre-generated as fragmented meshes. The fragments were not divided by material or use; for all intents and purposes, they were patched together randomly. We reconstituted them into a single mesh and imported the camera positions and frames.

We then sent each scan photo through Meta’s Segment Anything Model (SAM), using zero-shot segmentation to produce a large number of segments based on the visual characteristics of the image. At this point segmentation was purely visual, and materials were not yet considered. We culled these segments, removing small ones that would unnecessarily add to compute time for our classifier.
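The culling step can be sketched as a simple area filter. This is a minimal illustration rather than the project's actual code: it assumes each segment is a dictionary with an `area` field in pixels (the shape SAM's automatic mask generator returns), and the function name `cull_small_segments` and the 0.5% threshold are hypothetical choices made for the example.

```python
from typing import Any


def cull_small_segments(
    segments: list[dict[str, Any]],
    image_width: int,
    image_height: int,
    min_area_fraction: float = 0.005,  # assumed threshold, not taken from the project
) -> list[dict[str, Any]]:
    """Keep only segments that cover at least `min_area_fraction` of the image."""
    min_area_px = min_area_fraction * image_width * image_height
    return [seg for seg in segments if seg["area"] >= min_area_px]


# Toy segments; only the "area" field (in pixels) is used by the filter.
segments = [
    {"id": 0, "area": 120},
    {"id": 1, "area": 48_000},
    {"id": 2, "area": 9_500},
]
kept = cull_small_segments(segments, image_width=1280, image_height=1024)
print([seg["id"] for seg in kept])  # segments 1 and 2 survive the 6553.6 px cutoff
```

Expressing the threshold as a fraction of the image, rather than a fixed pixel count, keeps the filter meaningful if the photo resolution changes.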

Classification worked as follows. After the images were segmented using SAM, each masked region was sent to ChatGPT 4o for classification, using the template prompt below:

    def build_prompt(is_interior: bool, additional_context: str) -> str:
        # category_options, ext_category_options, material_options and
        # ext_material_options hold the allowed answers; they are defined
        # elsewhere and not shown here.
        return (
            "You are an architect and possess deep knowledge of the built world. You understand how buildings go together and the materials that constitute them."
            "Your job is to help your client identify the parts of their building and what they are made of."
            f"Attached is an image of {'an interior room' if is_interior else 'a building exterior'}. "
            f"{additional_context}"
            "There is a single red outline around a segmented region of the image. "
            "Your response to me will be in the form of a plus-separated (PSV) line of text with the following format: {description}+{family}+{category}+{material} "
            "First, write a text description, of what the region is enclosing."
            "Make this as detailed as possible, including the types of things inside the boundary and what materials they are made of. Pay attention to textures as these may provide clues."
            "Remember that your description should only describe what is inside the outline, not things that are nearby it."
            "Record this in the {description} field of the PSV. "
            "Next, based on your text description, determine if this is attached to the building or not. Examples of things that are not attached are furniture and plants."
            "Record your answer to this as either 'attached' or 'loose' in the {family} field of the PSV."
            "Next, based on your text description, determine which one of the following categories the text description fits in, "
            f"only choose one: {category_options if is_interior else ext_category_options}. Do not choose anything that is not on this list."
            "Record this in the {category} field of the PSV. "
            "Next, based on your original text description, determine which of the following materials the text description fits in, "
            f"only choose one: {material_options if is_interior else ext_material_options}. Do not choose anything that is not on this list."
            "Record this in the {material} field of the PSV. "
            "Remember, do not include any other words/characters in your response except those that fill in the plus-separate line as described above"
        )

We batched this process and wrote the results to JSON files. An example record is shown below:

    [
      {
        "DepthImgPath": "G:\\My Drive\\GSD_AI\\02_Models\\Matterport\\Matterport_Scans_Imgs\\scans\\17DRP5sb8fy\\undistorted_depth_images\\17DRP5sb8fy\\_\\undistorted_depth_images/902e65564f81489687878425d9b3cb55_d2_2.png",
        "RgbImgPath": "G:\\My Drive\\GSD_AI\\02_Models\\Matterport\\Matterport_Scans_Imgs\\scans\\17DRP5sb8fy\\undistorted_color_images\\17DRP5sb8fy\\_\\undistorted_color_images/902e65564f81489687878425d9b3cb55_i2_2.jpg",
        "SegmentImgPath": "G:\\My Drive\\GSD_AI\\02_Models\\Matterport\\Matterport_Scans_Imgs\\scans\\17DRP5sb8fy\\undistorted_color_images\\17DRP5sb8fy\\_\\undistorted_color_images/Segment/902e65564f81489687878425d9b3cb55_i2_2.jpg_masked.png",
        "MaskImgPath": "G:\\My Drive/GSD_AI/05_Segmentation/17DRP5sb8fy/SAM/902e65564f81489687878425d9b3cb55_i2_2/masks/008.png",
        "OutlineImgPath": "G:\\My Drive/GSD_AI/05_Segmentation/17DRP5sb8fy/SAM/902e65564f81489687878425d9b3cb55_i2_2/outlines/008.jpg",
        "CameraTransformMatrix": [
          -0.99453, -0.0769623, 0.0706153, -10.4303,
          0.103828, -0.654787, 0.748648, 2.55374,
          -0.0113796, 0.751885, 0.659196, 1.54698,
          0, 0, 0, 1
        ],
        "FocalLengthXPx": 1074.61,
        "OpticalCenterXPx": 630.692,
        "OpticalCenterYPx": 523.124,
        "ImgWidth": 1280,
        "ImgHeight": 1024,
        "ImgScaleFactor": 0.0001,
        "LabeledMeshIdsFaceIdsWithCt": {
          "51": {
            "947": 4, "990": 21, "991": 4, "995": 13, "996": 13, "1018": 1,
            "1022": 3, "1023": 3, "1024": 16, "1049": 1, "1051": 7, "1052": 22,
            "1070": 3, "1083": 2, "1084": 9, "1085": 5, "1086": 8, "1087": 1,
            "1089": 3, "1090": 11, "1111": 1, "1112": 3, "1113": 6, "1114": 2,
            "1115": 4, "1150": 2, "1223": 1
          }
        },
        "Label": "gypsum",
        "OriginalDescription": "segment of wall with an electrical outlet"
      }
    ]

This classification was then projected back onto the 3D model for each image position, and a vote was held for each mesh face, with the most common classification accepted as that face's material.
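The per-face vote can be sketched as follows. This is a minimal illustration under stated assumptions, not the project's actual code: it assumes every record carries the `Label` and `LabeledMeshIdsFaceIdsWithCt` fields seen in the example record, and that the innermost numbers count how many pixels of the segment landed on each face (the source does not say what they count). The function name `vote_face_materials`, the `weight_by_count` option, and the toy records are hypothetical.

```python
from collections import Counter, defaultdict


def vote_face_materials(records, weight_by_count=False):
    """Return {(mesh_id, face_id): material}, chosen by majority vote per face.

    With weight_by_count=False each record casts one vote per face it touches.
    With weight_by_count=True each vote is weighted by the stored count.
    """
    votes = defaultdict(Counter)
    for record in records:
        label = record["Label"]
        for mesh_id, faces in record["LabeledMeshIdsFaceIdsWithCt"].items():
            for face_id, count in faces.items():
                votes[(mesh_id, face_id)][label] += count if weight_by_count else 1
    # most_common(1) yields the top label; ties go to the label seen first.
    return {face: tally.most_common(1)[0][0] for face, tally in votes.items()}


# Toy records (made up) in the shape of the example JSON record.
records = [
    {"Label": "gypsum", "LabeledMeshIdsFaceIdsWithCt": {"51": {"947": 4, "990": 21}}},
    {"Label": "gypsum", "LabeledMeshIdsFaceIdsWithCt": {"51": {"947": 2}}},
    {"Label": "wood", "LabeledMeshIdsFaceIdsWithCt": {"51": {"947": 9, "990": 3}}},
    {"Label": "wood", "LabeledMeshIdsFaceIdsWithCt": {"51": {"990": 5}}},
]

face_materials = vote_face_materials(records)
weighted_materials = vote_face_materials(records, weight_by_count=True)
print(face_materials)
print(weighted_materials)
```

On this toy data the two schemes disagree on both faces. Whether to count one vote per image or to weight by coverage is a design choice that decides which views dominate.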

The video below illustrates the full process, from initial segmentation of the raw scan images, through classification, to projection of the material classifications back onto the model.